The other week I mentioned that I’d been playing around with the idea of a virtual JDBC driver for Essbase. Much to my amazement, quite a few people (relatively speaking…) expressed an interest in this project and even graciously offered to help test it.

To reiterate from last week, I still think that Vess is “interesting”. It has also been one of the more complicated things I have tried to create. Implementing such a thing is really kind of an exercise in pounding a round peg into a square whole (or pounding a cube into a cylinder…), requiring advanced knowledge of Java, the JDBC driver model, Essbase, the Essbase Java API, network programming, and more. I’m leveraging code from a half dozen projects I have written, some public and some not.

That said if you wondered why Oracle wasn’t nice enough to make a true JDBC driver for Essbase, it’s because you have to be a little crazy to do it. And apparently I’m just that right kind of masochistic crazy.

Anyway, the driver works – amazingly. It’s not production ready and won’t be for some time, but you can view your substitution variables from a table and even load data to a cube by doing a normal SQL INSERT.

In fact, you can even read a CSV file on the fly and insert it to a cube with no load rule, like this:

INSERT INTO VESS_SCHEMA.SAMPLE_BASIC_DATA ("Year", "Measures", "Product", "Market", "Scenario", "DATA")

SELECT * FROM CSVREAD('/Users/jasonwjones/test/sample_basic_data.txt');

Crazy, eh? So all you load rule haters rejoice.

You can even use SQL transformations to adjust the data and load that into a cube from a remote JDBC table:

INSERT INTO VESS_SCHEMA.DRILLING_DRILLING_DATA ("Years", "Time", "Location", "Account", "DATA")

SELECT 'FY' || RIGHT(FISCAL_YEAR, 2) AS Years, 'Jan' AS Time, DIVISION || '-' || LOCATION AS LOCATION, '0' || ACCOUNT, AMOUNT FROM TRANS_SMALL;

What next?

A lot of the hard stuff is in place so the name of the game now would be to iterate and start to introduce a few more table representations of Essbase concepts, such as cube stats, server sessions, and some other interesting things. I’m still contemplating how you could layer in the outline editing model in a sensible way.

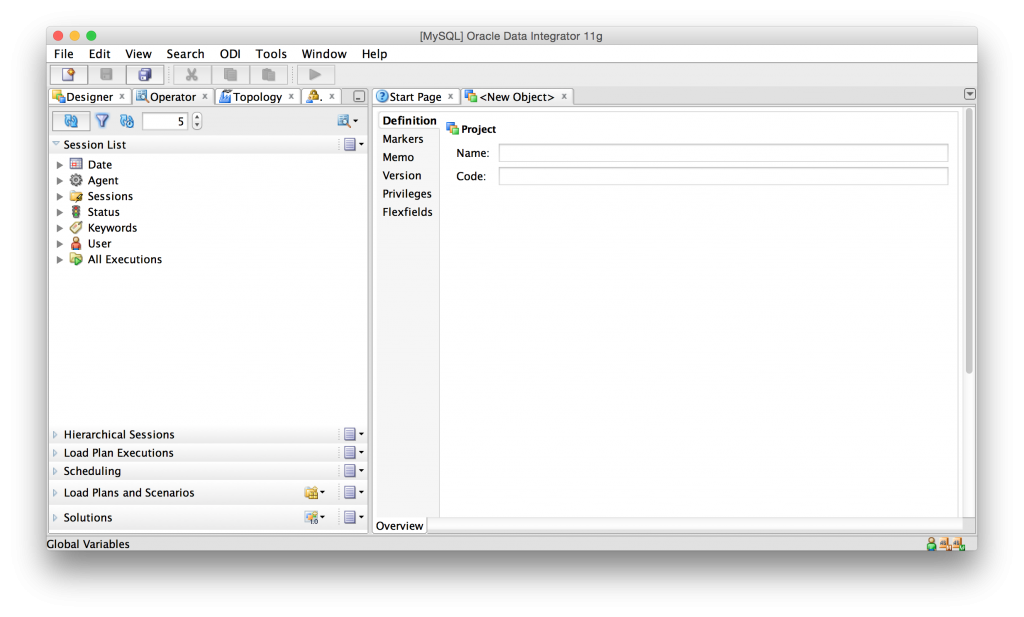

Getting back to this being “interesting” – I think it’s really interesting in a couple of ways. One is the idea of getting to use any off-the-shelf JDBC tool to be able to do things with an Essbase server. The other thing that is interesting is ODI (which I guess is interesting by way of the first reason, since databases/JDBC “fit” into ODI better than OLAP). The way Essbase works in ODI right now is that some custom Java libraries are used and glued together with some Jython scripts and Knowledge Module steps. In theory you could instead drop in the Vess driver as a generic SQL data source and do all of this with vanilla RKMs, LKMs, and IKMs. How cool would that be? To the extent that supporting Hyperion inside of ODI has been “problematic” for Oracle, you could perhaps sidestep it this way.

Anyway, I have a few folks that have valiantly offered to help test this thing out. If you have a spare cube, Java 1.8 (or greater), can download the free Squirrel SQL tool, and want to play with this, please hit me up, I would love some feedback.